This post describes a technique that is a combination of two techniques I’ve read in posts written by Simo Ahava and Mark Edmondson, two of the smartest chaps you’ll ever meet.

Simo’s article (linked above in case you don’t know how the interwebs works), describes how to use customTask to syphon GA data into Snowplow.

Very cool.

Mark’s (yes, also linked, no favouritism here!) is an advanced technique to turn GA360 exports into partitioned tables using Cloud Functions.

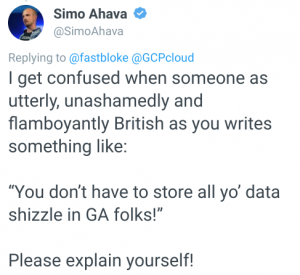

Both excellent reads and highly valuable techniques in their own right. I’m going to cherry pick some super useful parts from each blog to show how you can send your data exhaust in real time to BigQuery. Why? I dropped a flippant line into my last post that piqued Simo’s curiosity:

No, you don’t have to send all your data to GA. Indeed, often, there’s a solid technical or commercial reason not too. Sampling, high cardinality, massive hit volume, PII.

It’s likely you want to collect data now that will join with other datasets down the line. You want to collect the data, possibly in real time, for use in other cloud products (Machine Learning perhaps?) so BigQuery seems like a suitable candidate for storage.

How can this be done?

Here’s How

First, Simo’s technique. In the example here, we’re going to syphon off the entire ga hit payload – just as Simo did. Doesn’t have to be. You could send whatever form url encoded name value pairs you want. Here’s the key part of the customTask GTM variable:

/* syphon */

var endpoint = '{{Syhpon Cloud Function URL}}';

var globalSendTaskName = '_' + model.get('trackingId') + '_sendHitTask';

var originalSendHitTask = window[globalSendTaskName] = window[globalSendTaskName] || model.get('sendHitTask');

model.set('sendHitTask', function(sendModel) {

var payload = sendModel.get('hitPayload');

originalSendHitTask(sendModel);

var request = new XMLHttpRequest();

request.open('POST', endpoint, true);

request.setRequestHeader("Content-type", "application/x-www-form-urlencoded");

request.send(payload);

});

Same as Simo’s so far (Thanks again Simo!). Let’s look at the Cloud Function now.

Cloud Function

It’s a standard HTTP triggered function. Node again but can be ported to another language of choice, even Go if you want…

package.json

Spoiler alert – we’re using google-cloud/logging:

{

"name": "syphon",

"version": "0.0.1",

"dependencies": {

"@google-cloud/logging" : "^4.2"

}

}

index.js

const {Logging} = require('@google-cloud/logging');

exports.syphon = (req, res) => {

res.header('Content-Type','application/json');

res.header('Access-Control-Allow-Origin', '*');

res.header('Access-Control-Allow-Headers', 'Content-Type');

payload = req.body;

if(payload !== null){

const logging = new Logging('sapient-cycle-735');

const log = logging.log('gaSyphon-log');

const METADATA = {

resource: {

type: 'cloud_function',

labels: {

function_name: 'gaSyphon',

}

}

};

const gaData = {

event: 'gaSyphonData',

payload,

};

const syphonEntry = log.entry(METADATA, gaData);

log.write(syphonEntry);

}

res.send("ack");

};

From the source above, you can see the payload is lifted from req.body. If it’s not null, we log it to the gaSyphon log. What’s going on here?

Logging

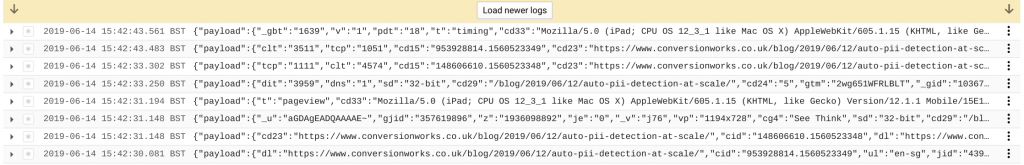

In Stackdriver Logging, we choose to look at the gaSyphon login the cloud functions section.

And we see our data in Stackdriver log format:

All well and good. We could query this but we want it in BQ. Now for the introduction of Mark’s technique. Mark described using an export to send a PubSub message when the GA data for yesterday lands in BQ.

Very handy.

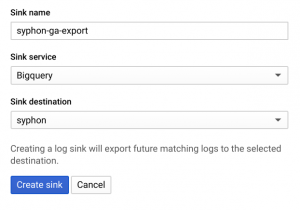

You can create all manner of exports though – we’ll have a BQ export:

Make sure you’ve created the required data set and the data will arrive…1 table per day as per GA:

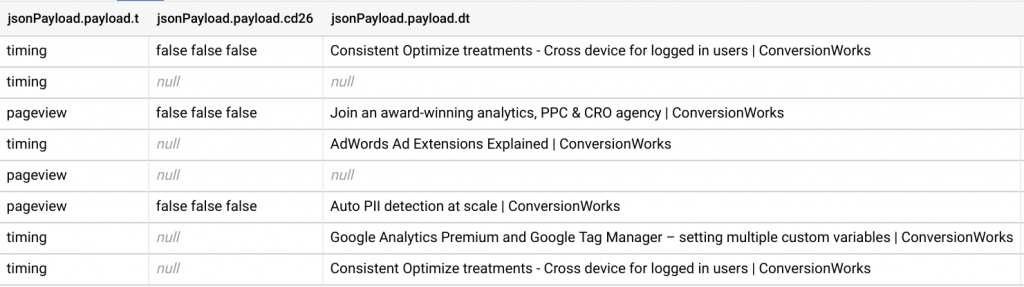

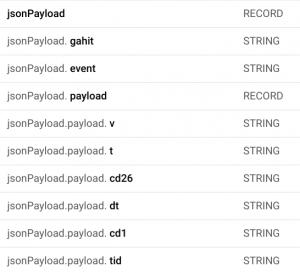

Notice the structure is different to normal GA in BQ – there are no totals for example and the data is in the jsonPayload.payload record:

Interesting side effect – there seems to be more syphon data than GA data – perhaps due to circumventing tracking blockers? Ahem… move along…

Wrap up

So, there you go. Custom data pipeline from client side to BQ in real time. Few moving parts. Nothing terribly original from me and huge help from Simo’s and Mark’s posts. I’m not saying this POC is perfect or course. I’d like to see how cost works out when I use Cloud Run instead of Cloud Functions. I’ll try a Python version to learn more Python.

Any other suggestions? Hopefully this is useful.